Learn How To Use Data to Build and Grow Hit Games - Part 2

This is the second and final part of the series on how to build and grow products with data (you can find the first part here). This two-part series is written by Oleg Yakubenkov, CEO @ GoPractice. Oleg has built his data-driven product management expertise working on some of the biggest games as well as some of the biggest social platforms in the world.

Check out Oleg’s learning platform, GoPractice. The platform offers a unique approach to training your product management chops, whether you’re currently working as a product manager or training to be one.

GoPractice is an online simulator course that will give you hands-on-like experience working on an ambitious product and making decisions based on real data in actual analytics systems.

All of our readers will get 10% off by following this link or mentioning “DoF” as a reference.

To make sure you don’t miss out on all of the 🔥content coming out in the future, please do subscribe to the Deconstructor of Fun infrequent but powerful newsletter.

#1 How to make an analytic global launch decision

So, you have soft launched your game. Now you’re trying to improve its key metrics: you release a new version every few weeks, run A/B tests, measure the impact of the product changes. But at what point will your game be ready for a global launch?

Too often, in my experience, the decision to launch globally is based on intuition. Truth be told, there is no single proven way to make this decision. The publisher or developer usually bases the launch decision on a hypothesis about the scalability of user acquisition. In my opinion, there are some other elements to consider.

The mobile gaming market is close to what an economist would call a perfectly competitive market (except for some categories such as card and fighting games - looking at you, Hearthstone and Contest of Champions). What that means is that if your product is not significantly better than the average product in the same category, there is little chance of winning a significant market share. Simply put, there has to be a reason for a player to switch.

Here are some signs that your game is outperforming the market:

Services like Appsflyer can help you figure out how you stack up against your competitors in the category.

Your game’s retention is better than the retention of similar titles in the sub-category. You can easily find the benchmarks from various service providers. Here are the ones from the recent report from Appsflyer.

Your LTV is higher than the LTV of other games in your sub-category. This of course means that either you retain users better or you have found a better way to monetize them. You can estimate the LTV of competitor games with the help of App Annie.

Your CPI is lower than your competitors’, at least for some segment of the audience. That is usually the case when you have found an unfulfilled need in the market or a more effective way to acquire users.

If the factors described above fit your game, then most likely you will find distribution channels with ROI > 0. If you target organic traffic sources you are likely to win the competition because app stores rank apps based on the value they generate. In the end, it’s quite simple. If you outperform your competitors you will eventually get to the top ranking position. Such games are more likely to get featured and supported by the app stores and are more likely to start getting users from word of mouth.
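As a rough illustration of the "ROI > 0" check, here is a minimal sketch that compares LTV with CPI per channel. The channel names and dollar figures are hypothetical, and the formula is one simple way to express per-user ROI, not necessarily the one your analytics system uses.

```python
def roi(ltv: float, cpi: float) -> float:
    """Per-user return on acquisition spend: (LTV - CPI) / CPI."""
    return (ltv - cpi) / cpi

# Hypothetical channels: (LTV per user, CPI), both in dollars.
channels = {
    "channel_a": (2.40, 2.00),
    "channel_b": (1.50, 2.50),
}

# Keep only the channels where a user earns back more than they cost.
profitable = [name for name, (ltv, cpi) in channels.items() if roi(ltv, cpi) > 0]
print(profitable)
```

A channel is worth scaling only while this number stays above zero for the segment you are buying.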

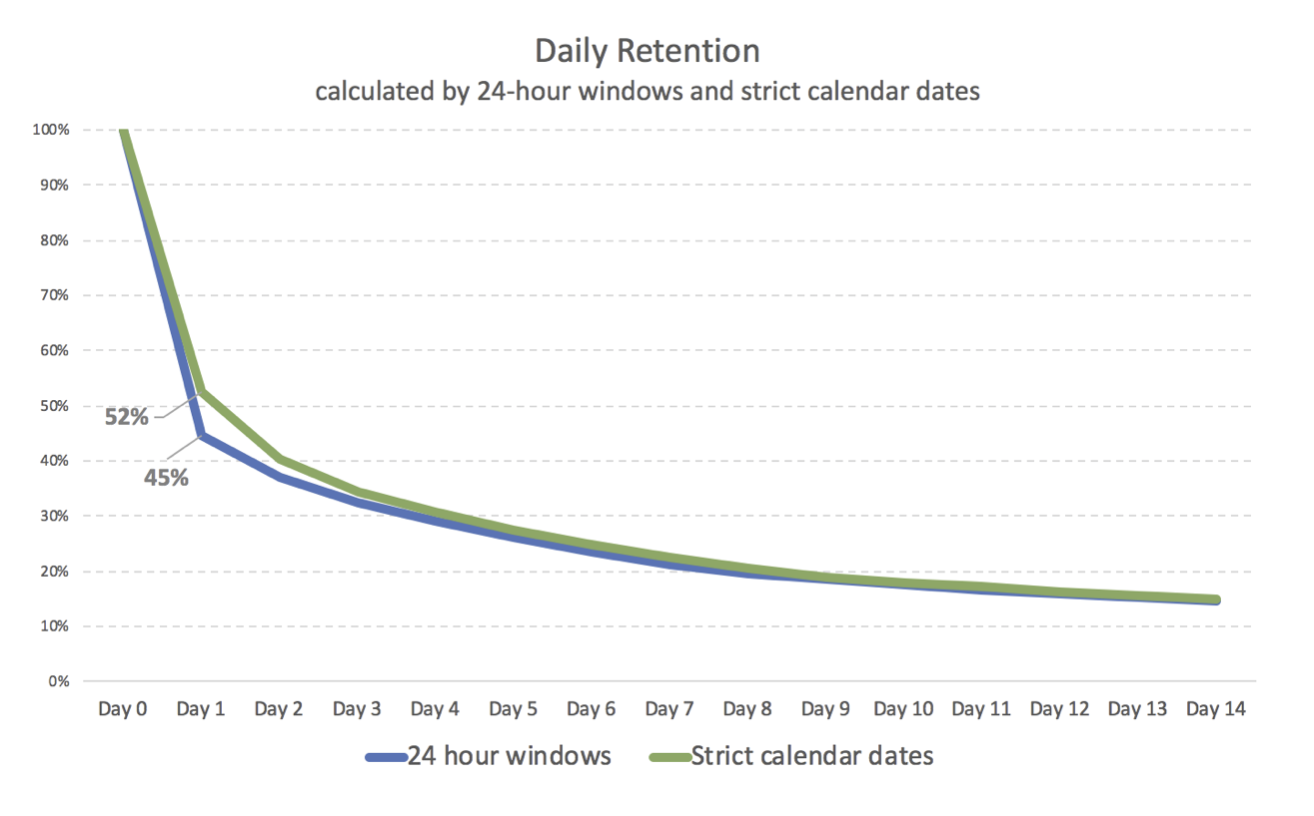

The important thing is to always compare apples to apples. Sometimes people talk about retention rate when what they really mean is rolling retention. Another common source of confusion is that there are several ways to calculate retention rate: some use calendar dates while others go with 24-hour windows. Amplitude, for instance, calculates retention rates based on 24-hour windows by default, while Appsflyer and some other analytics systems calculate retention based on calendar dates by default.

Here is an example of how big this difference can be when using different ways to calculate retention. On the graph below you can see the same product’s retention calculated based on 24-hour windows and calendar dates. D1 retention equals 52% in one case and 45% in the other. That is a huge difference!

On the other hand, when it comes to long-term retention, the KPI that actually matters, the calculation method has a much smaller effect.
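To make the difference between the two methods concrete, here is a minimal sketch of D1 retention computed both ways. The definitions below are one common reading of "calendar date" and "24-hour window" retention; the exact rules in Amplitude, Appsflyer or any other tool may differ in details, and the users are made up.

```python
from datetime import datetime, timedelta

def d1_retained_calendar(install, sessions):
    """Retained if any session falls on the calendar day after the install day."""
    next_day = install.date() + timedelta(days=1)
    return any(s.date() == next_day for s in sessions)

def d1_retained_24h(install, sessions):
    """Retained if any session falls 24 to 48 hours after the install."""
    return any(timedelta(hours=24) <= s - install < timedelta(hours=48) for s in sessions)

def d1_rate(users, rule):
    """Share of users that the given rule counts as retained on D1."""
    return sum(rule(install, sessions) for install, sessions in users) / len(users)

# Hypothetical users: (install time, later session times).
users = [
    # Installs late in the evening, returns next morning: calendar yes, 24h no.
    (datetime(2020, 1, 1, 23, 0), [datetime(2020, 1, 2, 8, 0)]),
    (datetime(2020, 1, 1, 22, 0), [datetime(2020, 1, 2, 7, 30)]),
    # Returns 26 hours after install: both methods count it.
    (datetime(2020, 1, 1, 9, 0), [datetime(2020, 1, 2, 11, 0)]),
    # Returns 47 hours after install, already on day 3: 24h yes, calendar no.
    (datetime(2020, 1, 1, 1, 0), [datetime(2020, 1, 3, 0, 0)]),
    # Never returns.
    (datetime(2020, 1, 1, 12, 0), []),
]

print(d1_rate(users, d1_retained_calendar), d1_rate(users, d1_retained_24h))
```

The same five users produce two different D1 numbers, which is why a benchmark is only useful if you know which definition sits behind it.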

#2 Outperforming competitors is the name of the game

Here is another story, this time about a game we made, Epic Split Truck Simulator. Soon after launch the game started getting organic traffic, but there was one specific change that had a dramatic impact on the game’s growth.

After the icon change, organic downloads tripled from ~5k to ~15k per day, and later grew past 30k.

At some point we decided to change the app icon and the screenshots. Below you can see the new and old version of the icon (the new one is placed on the left):

After changing the icon, the number of downloads roughly tripled, from ~5k to ~15k per day. A few weeks later the game was getting over 30k downloads per day.

Here is how the icon change took place and how it affected the growth of the game:

On January 20, the team launched a new icon (left) for 100% of users.

After that, the number of downloads increased dramatically (almost threefold), and the team decided to check whether this had anything to do with the new icon or not.

On January 26, the experiment was launched: 50% of the Google Play visitors were presented with the old icon, while the other 50% saw the new one.

On January 27, the team stopped the experiment because it had a negative effect on the number of downloads.

According to the results of the experiment, the new icon increased the page conversion rate by roughly 80%. However, the impact on the number of downloads was significantly greater (+200%). To understand the reasons for this discrepancy, let’s discuss the model of the game’s growth and how the new icon affected it at different levels.

Most new users were coming organically from Google Play. The main source of traffic was the "Games you might like" section on the main page of the app store. Such recommendations are personalised for each user. The new icon had a higher CTR, and this had an impact on the acquisition funnel at several levels, which entailed a significantly larger effect:

CTR (click-through rate) increased in the recommendation block on Google Play’s homepage thanks to the new icon. As a result, more users started visiting the game’s page.

Page conversion rate also increased.

Consequently, the conversion from an impression on the main page to a download improved by the combined effect of the two previous steps.

The above factors made Google Play recommend the game to new users more and more often.

Thus, one small change to the icon led to a threefold increase in the number of game downloads. As soon as we changed the icon, the overall conversion from an impression in Google Play’s recommendations section to a download increased significantly. The game started getting much more organic traffic, and the Play Store started promoting it more.
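The gap between +80% and +200% is easier to see when the funnel is written as a product of its steps. The sketch below uses the two lifts reported in the story; the baseline impressions, CTR and page conversion rate are hypothetical values chosen only so that the baseline lands near 5k downloads per day.

```python
def downloads(impressions: float, ctr: float, page_cvr: float) -> float:
    """Downloads as a product of funnel steps: impressions -> page visits -> installs."""
    return impressions * ctr * page_cvr

page_cvr_lift = 1.80   # +80% page conversion rate, measured in the experiment
downloads_lift = 3.00  # +200% downloads, observed after the icon change

# Whatever the page conversion rate does not explain must come from the steps
# before the page: a higher CTR and more impressions from the store.
# The story does not give the split between those two, so they stay combined.
residual_lift = downloads_lift / page_cvr_lift

# Hypothetical baseline funnel (assumed numbers, not from the game).
baseline = downloads(impressions=1_000_000, ctr=0.02, page_cvr=0.25)
after = downloads(
    impressions=1_000_000,
    ctr=0.02 * residual_lift,
    page_cvr=0.25 * page_cvr_lift,
)

print(round(residual_lift, 2), round(baseline), round(after))
```

Because the steps multiply, a moderate lift at two or three levels of the funnel compounds into a much larger lift in downloads.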

This story illustrates the importance of having better metrics than your competitors and how that affects growth and visibility in the app stores. However, the main lesson here is that it is crucial to understand your product model, its growth drivers and its key growth loops. This understanding will help you focus your efforts on the most impactful areas.

#3 Monitor and investigate sudden changes

Time for one more story about King of Thieves. There is a special skill in the game that allows users to increase the gold they steal from other players by a certain percentage. For example, if a player has leveled the skill up to 10%, stealing 1,000 gold gives them an extra 100 gold. The additional gold comes out of the dungeons they attack successfully, so the defending player pays for it.

Some players considered this unfair and complained a lot. The amounts of gold issued for the bonus weren’t very large, so the team decided to stop applying the penalty to defenders while still giving the attacker the bonus. They thought it would stop all the negative feedback from the community.

The hypothesis was that this minor economy change should have a positive effect on retention and avoid spoiling monetisation. However, the consequences of this small change were disastrous.

There were players who had already accumulated a lot of gold. Earlier, when they were attacked and robbed by other players, everything worked well. Their gold stashes were drained, other players got rich, and the overall balance was maintained.

The new move disrupted this balance. The wealthy players who got attacked remained rich, and those who robbed got richer as well. As a result, the amount of gold in the game economy began to grow at an uncontrollable pace.
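A toy simulation shows why such a change matters. This is a sketch under loud assumptions: 100 hypothetical players, each raid takes 10% of the defender’s stash, the skill adds 10% on top, and there are no other gold sources or sinks. It is not the actual King of Thieves economy; it only contrasts a bonus paid by the defender with a bonus created out of nothing.

```python
import random

def simulate(days: int, bonus_from_defender: bool, seed: int = 0) -> float:
    """Return the total gold in a toy economy after `days` of raids."""
    rng = random.Random(seed)
    gold = [1_000.0] * 100            # assumed: 100 players, 1,000 gold each
    steal_share, bonus = 0.10, 0.10   # assumed raid size and skill bonus
    for _ in range(days):
        for attacker in range(len(gold)):
            defender = rng.randrange(len(gold))
            if defender == attacker:
                continue
            stolen = gold[defender] * steal_share
            extra = stolen * bonus
            gold[attacker] += stolen + extra
            gold[defender] -= stolen
            if bonus_from_defender:
                # Old rule: the defender also pays for the bonus (zero-sum).
                gold[defender] -= extra
            # New rule: nobody pays for the bonus, so it is newly minted gold.
    return sum(gold)

old_rule_total = simulate(days=30, bonus_from_defender=True)
new_rule_total = simulate(days=30, bonus_from_defender=False)
print(round(old_rule_total), round(new_rule_total))
```

Under the old rule the total stays where it started, while under the new rule every successful raid adds gold to the system, and the growth compounds because richer defenders generate bigger bonuses.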

A small change in the economy caused a massive uncontrollable inflation in the game.

After a few days, in-game prices became meaningless because users had amassed an absurd amount of gold. Retention rates decreased because players lost interest in the game, and monetization became worse because users had no incentive to make purchases. And the volume of negative feedback, instead of declining, skyrocketed.

We have to remember that games are complex systems. Sometimes even small changes to a part of a product can have unpredictable effects on other parts. To be able to quickly understand what happens and to quickly fix unexpected consequences of product changes, you have to keep a close eye on the metrics visualizing all the key parts of your game. That’s why having thorough and detailed dashboards is crucial.

There is another, more important reason to regularly monitor the key metrics. No two days are alike for a live game with tens or hundreds of thousands of daily active players. Every day, something new happens to your game. Today, you’re going viral in the mainstream media; yesterday, you changed the keywords for your app in the app store; the day before, the team might have rolled out an update.

Each point on your dashboard's graphs represents the result of an experiment that the world (sometimes with your help) runs on your product. Each point on your dashboard’s graphs is an opportunity to understand your product and make it better.

I believe you need to become a researcher. Instead of asking the dashboard “Is everything OK?” ask “What caused this spike? Is there something I can learn from it?” From that moment, the changes that you used to interpret as mere accidents will push you to start learning new things about your product and your users.

Keep looking for “spikes.” Get to the very core of where they come from. Many of them will be self-explanatory, such as a one-off featuring in a specific country, and will hold nothing interesting to explore. But this doesn't mean you should stop researching. Some “spikes” will be caused by factors you haven't thought of before, factors that can impact the metrics of your product. And this is exactly what you need to keep an eye out for: the levers that will help you move the product forward.
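If you would rather not rely on eyeballing the dashboard, a simple rule can flag candidate spikes for you to investigate. The sketch below marks a day as a spike when it deviates from the trailing seven-day average by more than three standard deviations. The window, the threshold and the install numbers are all assumptions, and a real metric with weekly seasonality would need a more careful baseline.

```python
from statistics import mean, stdev

def find_spikes(values, window=7, threshold=3.0):
    """Return indices of days that deviate from the trailing-window mean
    by more than `threshold` standard deviations of that window."""
    spikes = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and abs(values[i] - mu) > threshold * sigma:
            spikes.append(i)
    return spikes

# Hypothetical daily installs; day 8 is the kind of point worth researching.
daily_installs = [5000, 5100, 4950, 5050, 4980, 5020, 5070, 5010, 9800, 5040]
print(find_spikes(daily_installs))
```

The rule only tells you where to look. Working out what the world did to your product on that day is still your job.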

#4 Incremental Improvements vs. “Bold Beats”

In my opinion, there is one key mistake that many teams make after scaling up their game: they underestimate the importance of consistent improvement of the game through marginal gains at different product levels.

Boom Beach’s Warships update is a perfect example of a “bold beat”: an update that takes significant effort and introduces new ways to engage with the game.

In the early days of working on a new game, teams tend to look for big wins that could significantly improve the key metrics. However, if you have done a great job at the soft launch stage, by the time of the global launch you should have already collected key information on what truly moves the needle and experimented with some big changes. Thus, after the game has been scaled, it’s time to dig deeper and start optimizing key product flows and funnels through rigorous experimentation.

Take this hypothetical example. Say you have five teams (or pods, as they are often called) working on different parts of the game: acquisition, onboarding, engagement, retention and monetization. Each team launches 10 experiments over a quarter. Four out of five experiments fail to deliver any positive impact. One out of five proves successful, bringing a small lift of 2% to 3% to a key KPI. Each improvement is small and doesn’t visibly affect the overall metrics of the game. Yet if you combine all these tiny wins over several quarters, you will see a clear upward trendline in the game’s performance, which translates into healthy and sustainable growth. In this particular hypothetical example there would be 10 successful experiments over a quarter. All of the improvements would be small, but combined they would increase the key metric by almost 30%.
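The arithmetic behind that last figure can be sketched in a few lines. It assumes that every successful experiment lifts the same key metric and that the lifts compound multiplicatively, with 2.5% taken as the midpoint of the 2% to 3% range.

```python
def combined_lift(wins: int, lift_per_win: float) -> float:
    """Total relative lift when each win multiplies the metric by (1 + lift)."""
    return (1 + lift_per_win) ** wins - 1

teams = 5
experiments_per_team = 10
success_rate = 1 / 5

# 50 experiments per quarter, one in five succeeds.
wins = round(teams * experiments_per_team * success_rate)

low = combined_lift(wins, 0.02)    # every win at the bottom of the range
mid = combined_lift(wins, 0.025)   # midpoint of the range
high = combined_lift(wins, 0.03)   # every win at the top of the range
print(wins, f"{low:.1%}", f"{mid:.1%}", f"{high:.1%}")
```

Ten wins of 2.5% each compound to roughly 28%, and the result lands between about 22% and 34% depending on where in the 2% to 3% range the wins fall.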

A focus on incremental improvements does not mean that you should avoid bigger changes that bring completely new elements and experiences to your game. These “bold beats,” as they are often called, need a prominent place in the product roadmap. I merely advocate a balance between low-cost incremental improvements and much more demanding new features.

Think of the first version of the iPhone and what it has become now. In many ways, the iPhone’s huge success was achieved due to the consistent improvement of the key systems of the device, like its camera, processor and battery. Such progress usually doesn't happen due to some sudden successful leap forward. It happens because of the cumulative effect of dozens or even hundreds of small improvements made at different product levels year after year.

Keeping the focus on constant incremental improvements requires a data-driven culture and an experimentation infrastructure that makes it easy for any of the teams (or pods) to launch experiments and measure their impact on the product’s key metrics.

As another example, here’s the description of Uber’s experimentation platform. It truly shows how data-driven Uber is. Improving the product constantly is something Uber has to do to stay ahead of its agile and hungry competition. And while Uber’s competitors are very aggressive and motivated, I think the competition in mobile games is far more aggressive, to the point where not being data-driven is a path to mediocrity at best.

Rapid and Constant Beats the Race

There are many ways data can increase your chances of building and operating a successful mobile game. The key is to stop thinking of data as a way to look back at what you have done and instead start using it as a tool that helps you make decisions, decrease uncertainty and remove the main product risks as early as possible.

Experiment often and experiment early - even before you actually start the development. And never underestimate the importance of consistently improving the product through marginal gains. The quality of such work is the factor that often separates the best products from those that lag behind.

Hope you enjoyed the series. Let us know what you thought via Twitter, Facebook or email. We love hearing from you!

ps: do check out GoPractice, an absolutely fantastic platform to make your organisation, and yourself, more data-driven. We at Deconstructor of Fun did the course and absolutely loved it!

pps: don’t forget to claim your free month with GeekLab. Arguably the best platform to test your marketability!

Geeklab is your go-to solution to analyze and create AppStore and Google Play product pages.

Editor’s note:

I’ve personally used several different services to test marketability, and Geeklab has been by far the most accurate and cost-efficient of them all. During the writing of this post, we connected with the folks behind Geeklab and they agreed to offer a free month of their service to all of our readers. That’s how confident they (and we) are in their platform.

Use this link to get your free month and start testing themes!